Parameter vs. Statistic: Unveiling the Key Differences in Data Analysis

Navigating the world of data analysis can feel like traversing a complex maze. Two terms that often cause confusion, even for seasoned analysts, are “parameter” and “statistic.” While both relate to describing and understanding data, they represent fundamentally different concepts. Understanding the nuances between a parameter and a statistic is crucial for drawing accurate conclusions, making informed decisions, and avoiding costly errors in research and practical applications. This comprehensive guide will dissect the core differences between these two terms, exploring their significance, practical applications, and potential pitfalls. We’ll delve into real-world examples and clarify any lingering confusion, ensuring you have a firm grasp on these essential statistical concepts. By the end, you’ll be equipped to confidently differentiate between parameters and statistics and apply them effectively in your own data analysis endeavors.

Decoding Parameters: The True Population Value

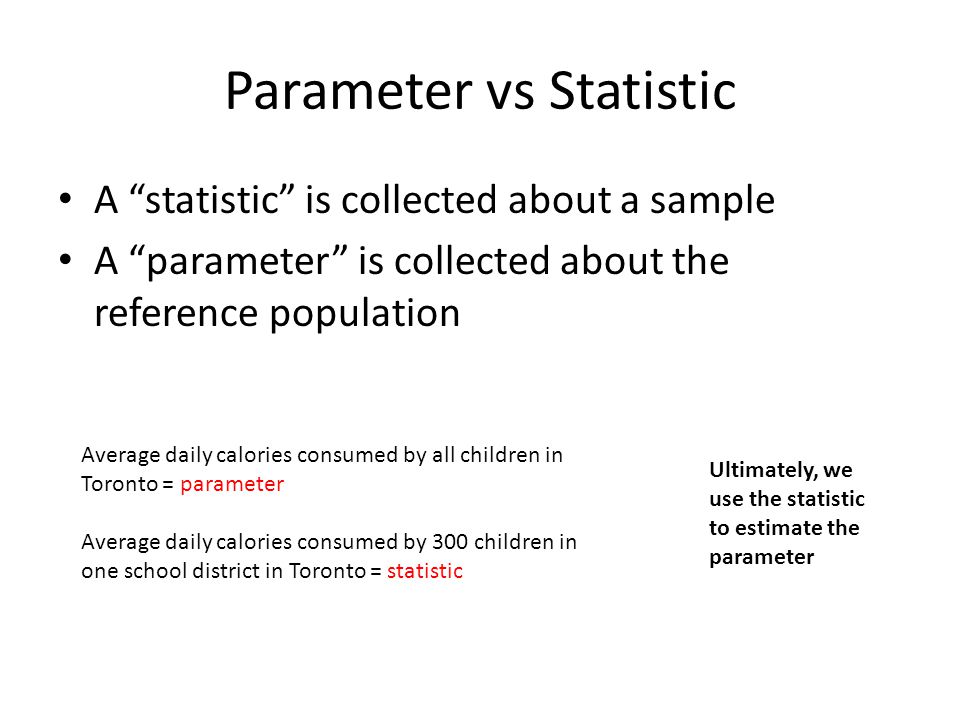

A parameter is a numerical value that describes a characteristic of an entire population. Think of it as the answer you would get if you could ask every single member of a group a question and calculate the result. Because it is based on the entire population, a parameter is generally considered a fixed, albeit often unknown, value. Obtaining the exact value of a parameter requires a census, which is rarely feasible or practical, especially with large populations.

Understanding Population Parameters

The key to understanding parameters lies in recognizing that they represent the entire population. Here are a few essential points:

- Complete Enumeration: A parameter is calculated from every individual or item within the population.

- Fixed Value: The parameter is a constant value that doesn’t change (unless the population itself changes).

- Often Unknown: In most real-world scenarios, it’s impossible or impractical to measure the parameter directly due to the size or inaccessibility of the population.

Examples of Parameters

To illustrate the concept of a parameter, consider these examples:

- The average height of all adult women in the United States: If we could measure the height of every adult woman in the US and calculate the average, that would be a population parameter.

- The proportion of defective items produced by a manufacturing plant in a given year: If we inspected every single item produced, we could determine the true proportion of defective items – a parameter.

- The mean income of all households in Canada: Obtaining the income of every household and calculating the average would give us the population parameter for mean household income.
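The second example above can be sketched in a few lines, assuming a hypothetical plant whose complete production record is available. The counts are invented for illustration. Because every item is included, the result is the parameter itself rather than an estimate.

```python
# Hypothetical, fully enumerated population: one inspection result per item
# produced in the year (True = defective). The counts are made up.
population = [True] * 30 + [False] * 970

# Every item is included, so this proportion is a parameter, not an estimate.
defective_proportion = sum(population) / len(population)
print(f"Population proportion defective: {defective_proportion:.3f}")
```

In practice the full list is rarely available, which is why the rest of this guide turns to samples.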

Why Parameters Matter

While often unobservable, parameters are the holy grail of statistical inference. They represent the true state of the population and serve as the ultimate target of our estimations. Understanding the concept of a parameter helps us frame our research questions and interpret our findings accurately. Parameters provide a benchmark for evaluating the accuracy and reliability of our statistical inferences.

Understanding Statistics: Estimates from Samples

A statistic, on the other hand, is a numerical value that describes a characteristic of a sample. A sample is a subset of the population. Statistics are used to estimate population parameters when it’s impossible or impractical to study the entire population. Unlike parameters, statistics are not fixed values; they vary from sample to sample. This variability is a crucial aspect of statistical inference and is quantified by concepts like standard error and confidence intervals.

Key Characteristics of Statistics

Here’s what defines a statistic:

- Based on a Sample: A statistic is calculated from a subset of the population.

- Variable Value: The value of a statistic changes depending on the specific sample selected.

- Used for Estimation: Statistics are used to estimate unknown population parameters.

Examples of Statistics

Consider these examples of statistics:

- The average height of a random sample of 100 adult women in the United States: This is a statistic that can be used to estimate the average height of all adult women in the US (the parameter).

- The proportion of defective items in a batch of 500 items selected from a manufacturing plant’s production: This statistic can be used to estimate the overall proportion of defective items produced by the plant (the parameter).

- The mean income of a survey of 1,000 households in Canada: This statistic is an estimate of the mean income of all households in Canada (the parameter).
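To make the first example concrete, here is a minimal sketch using a synthetic population of heights. The population size, its mean of 162 cm, and its standard deviation of 7 cm are assumptions chosen for illustration, not real figures. The population mean is computed once from every member, while each random sample of 100 produces its own, slightly different, sample mean.

```python
import random
import statistics

random.seed(42)  # fixed seed so the sketch is reproducible

# Synthetic stand-in for a population: 100,000 heights in cm.
# The distribution (mean 162, sd 7) is an assumption for illustration only.
population = [random.gauss(162, 7) for _ in range(100_000)]
parameter = statistics.fmean(population)  # computed from every member

# Each random sample of 100 yields its own statistic.
sample_means = [
    statistics.fmean(random.sample(population, 100)) for _ in range(5)
]

print(f"Population mean (parameter): {parameter:.2f}")
for i, m in enumerate(sample_means, start=1):
    print(f"Sample {i} mean (statistic): {m:.2f}")
```

The parameter stays the same on every run of the loop; only the statistics move.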

The Role of Statistics in Inference

Statistics are the workhorses of statistical inference. They allow us to make educated guesses about population parameters based on limited data. The process of statistical inference involves using sample statistics to draw conclusions about the broader population. This process relies on probability theory and statistical distributions to quantify the uncertainty associated with our estimates. The goal is to provide the *best* estimate possible, given the limitations of the sample data.
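One common way to quantify that uncertainty is a confidence interval around the sample mean. The sketch below assumes a large sample, so the normal approximation is reasonable, and uses synthetic lognormal "incomes" whose parameters are invented for illustration. The standard error is the sample standard deviation divided by the square root of the sample size.

```python
import math
import random
import statistics

random.seed(7)

# Synthetic sample standing in for survey data: 1,000 household incomes.
# The lognormal shape and its parameters are assumptions, not real figures.
sample = [random.lognormvariate(11, 0.6) for _ in range(1_000)]

n = len(sample)
sample_mean = statistics.fmean(sample)  # the statistic
standard_error = statistics.stdev(sample) / math.sqrt(n)

# Large-sample (normal approximation) 95% interval for the population mean.
z = statistics.NormalDist().inv_cdf(0.975)  # about 1.96
ci_low = sample_mean - z * standard_error
ci_high = sample_mean + z * standard_error

print(f"Sample mean: {sample_mean:,.0f}")
print(f"95% CI: ({ci_low:,.0f}, {ci_high:,.0f})")
```

For small samples, a t-based interval would usually be the more appropriate choice. The normal approximation is used here only to keep the sketch within the standard library.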

Parameter vs. Statistic: Key Distinctions

The table below summarizes the key differences between parameters and statistics:

| Feature | Parameter | Statistic |
|---|---|---|
| Definition | Describes a characteristic of a population | Describes a characteristic of a sample |
| Data Source | Entire population | Subset of the population (sample) |
| Value | Fixed and constant (usually unknown) | Variable (depends on the sample) |
| Purpose | To describe the true population value | To estimate the population parameter |

The Importance of Random Sampling

The accuracy of a statistic as an estimate of a parameter depends heavily on the quality of the sampling method. Random sampling is a cornerstone of statistical inference because it gives every member of the population a known, nonzero chance of being selected (an equal chance, in the case of simple random sampling). This guards against selection bias and makes the sample representative on average, although any individual sample can still differ from the population by chance. While perfect random sampling is often difficult to achieve in practice, striving for it is crucial for obtaining reliable statistical estimates.

Types of Sampling Methods

Several sampling methods exist, each with its strengths and weaknesses. Some common methods include:

- Simple Random Sampling: Every member of the population has an equal chance of being selected, and every possible sample of a given size is equally likely.

- Stratified Sampling: The population is divided into subgroups (strata), and a random sample is taken from each stratum.

- Cluster Sampling: The population is divided into clusters, and a random sample of clusters is selected. All members of the selected clusters are included in the sample.

- Systematic Sampling: Every nth member of the population is selected, usually starting from a randomly chosen point.

The choice of sampling method depends on the specific research question, the characteristics of the population, and the available resources. Careful consideration of the sampling method is essential for ensuring the validity of statistical inferences.
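The four methods listed above can be sketched with Python's standard `random` module. The population here is hypothetical: 1,000 units, each tagged with a made-up `region` (used as the stratum) and a made-up `site` (used as the cluster). The function names are illustrative, not part of any library.

```python
import random

random.seed(0)

# Hypothetical population: 1,000 units, 4 regions (strata), 50 sites (clusters).
regions = ["north", "south", "east", "west"]
population = [
    {"id": i, "region": regions[i % 4], "site": i // 20} for i in range(1_000)
]

def simple_random(pop, n):
    """Draw n units so that every subset of size n is equally likely."""
    return random.sample(pop, n)

def stratified(pop, n_per_stratum):
    """Draw a simple random sample within each stratum (region)."""
    sample = []
    for region in regions:
        stratum = [u for u in pop if u["region"] == region]
        sample.extend(random.sample(stratum, n_per_stratum))
    return sample

def cluster(pop, n_clusters):
    """Pick whole clusters (sites) at random and keep every unit in them."""
    sites = sorted({u["site"] for u in pop})
    chosen = set(random.sample(sites, n_clusters))
    return [u for u in pop if u["site"] in chosen]

def systematic(pop, step):
    """Take every step-th unit after a random starting offset."""
    start = random.randrange(step)
    return pop[start::step]

srs = simple_random(population, 100)
strat = stratified(population, 25)
clus = cluster(population, 5)
syst = systematic(population, 10)
for name, s in [("simple", srs), ("stratified", strat),
                ("cluster", clus), ("systematic", syst)]:
    print(f"{name}: {len(s)} units")
```

The stratified sample guarantees equal representation from each region, whereas the cluster sample concentrates all of its units in a handful of sites. That trade-off between coverage and cost is typically what drives the choice between them.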

Statistical Inference: Bridging the Gap

Statistical inference is the process of using sample statistics to make generalizations about population parameters. When the inference takes the form of a hypothesis test, the process involves several key steps:

- Formulating a Hypothesis: State a specific claim about the population parameter (the null hypothesis), along with the alternative it will be tested against.

- Collecting Data: Obtain a representative sample from the population.

- Calculating Statistics: Calculate relevant statistics from the sample data.

- Assessing Evidence: Use statistical tests to determine the strength of the evidence against the null hypothesis.

- Drawing Conclusions: Make a decision about whether to reject or fail to reject the null hypothesis.

Statistical inference is a powerful tool for making decisions based on incomplete information. However, it’s important to recognize that statistical inferences are always subject to some degree of uncertainty. This uncertainty is quantified by concepts like p-values, confidence intervals, and margins of error.
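The steps above can be sketched as a large-sample z-test for a mean. Everything in this example is hypothetical: the claimed fill weight of 500 g, the synthetic sample of 200 packages, and the 0.05 significance level are assumptions chosen for illustration.

```python
import math
import random
import statistics

random.seed(1)

# 1. Hypothesis: H0 says the population mean fill weight is 500 g.
mu_0 = 500.0

# 2. Data: a synthetic sample of 200 packages. The mean of 501 g and sd of
#    4 g used to generate it are assumptions for illustration.
sample = [random.gauss(501, 4) for _ in range(200)]

# 3. Statistics: the sample mean and its standard error.
n = len(sample)
sample_mean = statistics.fmean(sample)
standard_error = statistics.stdev(sample) / math.sqrt(n)

# 4. Evidence: large-sample z statistic and two-sided p-value.
z = (sample_mean - mu_0) / standard_error
p_value = 2 * (1 - statistics.NormalDist().cdf(abs(z)))

# 5. Conclusion at a significance level chosen before looking at the data.
alpha = 0.05
decision = "reject H0" if p_value < alpha else "fail to reject H0"
print(f"z = {z:.2f}, p = {p_value:.4f}: {decision}")
```

Note that "fail to reject" is not the same as "accept": a large p-value only means the sample does not provide strong evidence against the null hypothesis.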

Common Misconceptions and Pitfalls

Several common misconceptions can lead to errors in statistical analysis. Here are a few to watch out for:

- Confusing statistics with parameters: Always be clear about whether you are describing a sample or the entire population.

- Assuming a sample is perfectly representative: No sample is ever perfectly representative of the population. Be aware of potential biases and limitations.

- Overgeneralizing from small samples: Small samples provide less information about the population and can lead to unreliable inferences.

- Ignoring sampling bias: Non-random sampling methods can introduce bias and invalidate statistical inferences.

Avoiding these pitfalls requires a solid understanding of statistical principles and careful attention to detail throughout the research process.
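Two of these pitfalls are easy to see in a small simulation. The sketch below uses a synthetic population whose distribution is an assumption for illustration. It compares how much sample means vary for small and large samples, and then shows that a large but biased sample (one that can only reach members above a cutoff) still misses the parameter.

```python
import random
import statistics

random.seed(3)

# Synthetic population; the distribution is an assumption for illustration.
population = [random.gauss(50, 10) for _ in range(50_000)]
parameter = statistics.fmean(population)

def spread_of_sample_means(n, repeats=500):
    """Standard deviation of the sample mean across repeated samples of size n."""
    means = [
        statistics.fmean(random.sample(population, n)) for _ in range(repeats)
    ]
    return statistics.stdev(means)

# Small samples: the statistic swings much more from sample to sample.
spread_small = spread_of_sample_means(10)
spread_large = spread_of_sample_means(1_000)

# Biased "convenience" sample: only members above 55 can be reached,
# so even 1,000 observations land far from the parameter.
reachable = [x for x in population if x > 55]
biased_mean = statistics.fmean(random.sample(reachable, 1_000))

print(f"Parameter: {parameter:.2f}")
print(f"Spread of sample means, n = 10:   {spread_small:.2f}")
print(f"Spread of sample means, n = 1000: {spread_large:.2f}")
print(f"Mean of biased sample (n = 1000): {biased_mean:.2f}")
```

Increasing the sample size shrinks random error but does nothing for bias, which is why the sampling method matters as much as the sample size.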

Practical Applications in Different Fields

The distinction between parameters and statistics is crucial in various fields:

- Healthcare: Researchers use sample statistics to estimate the effectiveness of new treatments for a population of patients.

- Marketing: Marketers use sample surveys to estimate customer preferences for a target market.

- Finance: Analysts use historical data (a sample) to estimate parameters such as the expected return or volatility of a stock, which in turn inform forecasts of future prices.

- Politics: Pollsters use sample polls to estimate voter preferences in an election.

In each of these fields, understanding the limitations of sample data and the potential for error is essential for making informed decisions.

The Role of Technology in Statistical Analysis

Modern statistical software has revolutionized the field of data analysis. These tools allow researchers to perform complex calculations, visualize data, and conduct statistical inferences with ease. Popular tools include R, Python (with libraries like NumPy and SciPy), SPSS, and SAS. However, it’s crucial to remember that technology is just a tool. A solid understanding of statistical principles is still essential for interpreting results and drawing meaningful conclusions. As expert data scientists, we’ve seen firsthand how easy it is to misinterpret outputs without a firm understanding of the underlying statistical concepts.

Advanced Concepts: Bayesian vs. Frequentist Approaches

The interpretation of parameters and statistics can also depend on the statistical framework being used. Two prominent frameworks are Bayesian and Frequentist statistics.

- Frequentist Statistics: In the frequentist approach, parameters are considered fixed but unknown constants. Statistical inference focuses on the long-run frequency of events. Hypothesis testing and confidence intervals are key tools.

- Bayesian Statistics: In the Bayesian approach, parameters are treated as random variables with probability distributions. Prior beliefs about the parameter are combined with sample data to update the probability distribution. Bayesian inference focuses on calculating posterior probabilities.

The choice between Bayesian and Frequentist approaches depends on the specific research question and the available information. Both frameworks offer valuable insights, but they differ in their underlying assumptions and interpretations.
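The contrast can be illustrated with a proportion. In this minimal sketch the data (12 defective items in a sample of 200) and the uniform Beta(1, 1) prior are assumptions chosen for illustration. The frequentist estimate is the sample proportion. The Bayesian result is a full posterior distribution, which for binomial data and a Beta prior is again a Beta distribution.

```python
# Hypothetical data: 12 defective items found in a sample of 200.
defects, n = 12, 200

# Frequentist point estimate: the sample proportion, treating the true
# proportion as a fixed but unknown constant.
p_hat = defects / n

# Bayesian update with a Beta(a, b) prior on the proportion. Beta(1, 1) is a
# uniform prior, chosen here only for illustration. With binomial data the
# posterior is Beta(a + defects, b + n - defects).
prior_a, prior_b = 1.0, 1.0
post_a = prior_a + defects
post_b = prior_b + (n - defects)
posterior_mean = post_a / (post_a + post_b)

print(f"Sample proportion: {p_hat:.4f}")
print(f"Posterior: Beta({post_a:.0f}, {post_b:.0f}), mean {posterior_mean:.4f}")
```

With this much data and a flat prior the two answers are close. A more informative prior or a smaller sample would pull the posterior mean further from the sample proportion.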

Navigating Data Analysis: The Path Forward

Mastering the concepts of parameters and statistics is a journey that requires continuous learning and practice. By understanding the fundamental differences between these two terms, you can avoid common pitfalls, interpret statistical results accurately, and make informed decisions based on data. As you delve deeper into the world of data analysis, remember that statistics are tools for understanding the world around us. Use them wisely, critically, and ethically.

Reflecting on Parameter vs. Statistic and Their Impact on Data Insights

Distinguishing between a parameter and a statistic is more than just an academic exercise; it’s a fundamental skill for anyone working with data. By understanding what each represents – the true population value versus an estimate from a sample – you can critically evaluate research findings, interpret data visualizations accurately, and make sound decisions based on evidence. This distinction underpins the very foundation of statistical inference and helps ensure the reliability and validity of your conclusions. Whether you’re a student, researcher, or data professional, a clear grasp of these concepts will empower you to unlock the true potential of data and make a meaningful impact in your field. So, continue to explore, question, and refine your understanding of these essential statistical building blocks, and you’ll be well-equipped to navigate the ever-evolving landscape of data analysis.