Decoding the 429 Status Code: Understanding Rate Limiting and How to Respond

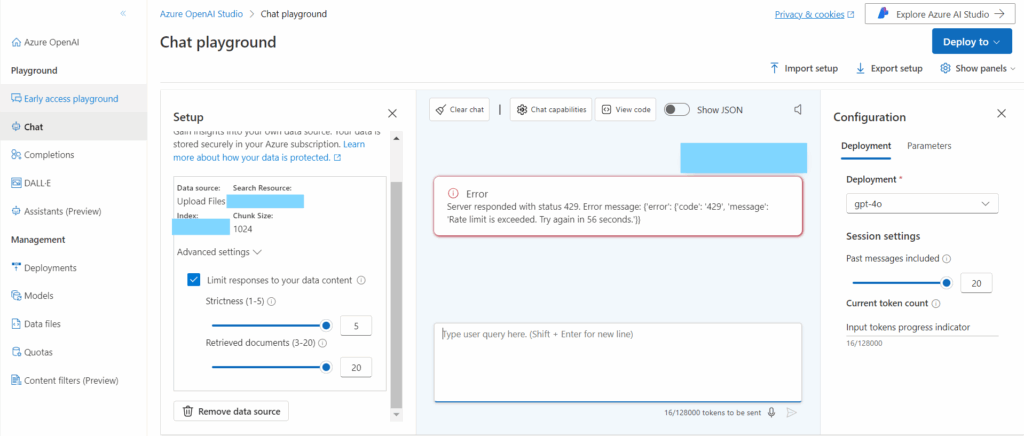

Encountering a ‘429 Too Many Requests’ error can be frustrating for both website users and developers. This HTTP status code signals that a client has sent too many requests in a given amount of time and has triggered a rate-limiting mechanism on the server. Understanding what causes it and how to handle it is key to maintaining a smooth user experience and preventing server overload. This guide explains how the 429 status code works and offers practical solutions for both developers and end-users.

What is the 429 Status Code? A Deep Dive

The 429 status code, officially named “Too Many Requests” and defined in RFC 6585, is an HTTP response code indicating that the client has sent too many requests in a given timeframe. Servers use this code as a form of rate limiting, a technique to protect themselves from abuse, whether intentional (like a DDoS attack) or unintentional (like a faulty script repeatedly requesting data).

Unlike general-purpose HTTP error codes, the 429 is specifically designed to address excessive requests. It’s a signal for the client to slow down and try again later. The server often includes a ‘Retry-After’ header in the response, indicating how long the client should wait before making another request; the value is either a number of seconds or an HTTP date. Ignoring this header can lead to continued blocking and a degraded experience.
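
As a minimal sketch of how a client might interpret that header, the function below converts a ‘Retry-After’ value into a number of seconds to wait. The function name `retry_after_seconds` and its `now` parameter are illustrative assumptions, not part of any particular library.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def retry_after_seconds(value, now=None):
    """Return the wait in seconds for a Retry-After value, or None if unparseable.

    Hypothetical helper: handles both forms the header can take,
    a delay in seconds ("120") or an HTTP date.
    """
    value = value.strip()
    if value.isdigit():
        # Delay-in-seconds form.
        return float(value)
    try:
        when = parsedate_to_datetime(value)
    except (TypeError, ValueError):
        # Neither form matched; let the caller pick a fallback delay.
        return None
    if when.tzinfo is None:
        # Treat a date without zone information as UTC.
        when = when.replace(tzinfo=timezone.utc)
    if now is None:
        now = datetime.now(timezone.utc)
    # A date in the past means the client may retry immediately.
    return max(0.0, (when - now).total_seconds())
```

Returning `None` for an unparseable value leaves the fallback policy, such as exponential backoff, to the caller.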

The core concept behind the 429 status code is fairness and resource management. By limiting the number of requests a single user or client can make, the server ensures that resources are available for all users, preventing any one individual from monopolizing the system. This is especially important for APIs, which are often subject to high traffic and potential abuse.

The 429 status code is a crucial part of modern web architecture, designed to maintain stability and prevent abuse. It’s not just about preventing malicious attacks; it’s also about managing unintentional overuse and ensuring a positive user experience for everyone.

The Role of Rate Limiting in Web Security and Stability

Rate limiting, the practice of restricting the number of requests a user can make to a server within a specific timeframe, is a cornerstone of web security and stability. It serves multiple crucial purposes:

- Preventing Denial-of-Service (DoS) and Distributed Denial-of-Service (DDoS) Attacks: By limiting the rate of requests, servers can mitigate the impact of malicious attacks aimed at overwhelming the system.

- Protecting Against Brute-Force Attacks: Rate limiting can slow down or prevent attackers from attempting to guess passwords or other sensitive information through repeated login attempts.

- Ensuring Fair Resource Allocation: By limiting the number of requests from any single user, rate limiting ensures that resources are available for all users, preventing any one individual from monopolizing the system.

- Preventing Accidental Overload: Sometimes, legitimate users or faulty scripts can unintentionally generate excessive requests. Rate limiting can prevent these situations from causing server instability.

- Cost Management: For services that charge based on usage, rate limiting can help control costs by preventing users from exceeding their allocated resources.

Different rate-limiting algorithms exist, each with its own strengths and weaknesses. Common methods include:

- Token Bucket: A virtual bucket holds a certain number of tokens, representing the number of requests a user can make. Each request consumes a token, and the bucket is refilled at a specific rate.

- Leaky Bucket: Requests are added to a queue (the bucket), and they are processed at a fixed rate. If the queue is full, new requests are dropped.

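- Fixed Window Counter: A counter tracks the number of requests within a fixed time window. Once the window expires, the counter is reset. This is simple and cheap, but a client can send up to twice the limit in a short span by bursting at the end of one window and the start of the next.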

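- Sliding Window Log: This method maintains a timestamped log of recent requests. When a new request arrives, the system discards entries older than the window and checks whether the remaining count has reached the rate limit. It is more accurate than a fixed window but stores one entry per request.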
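
To make the first of these concrete, here is a minimal single-process sketch of a token bucket. The class name `TokenBucket` and the parameters `rate`, `capacity`, and `clock` are assumptions chosen for illustration; a production limiter would also need locking and per-client state.

```python
import time


class TokenBucket:
    """Illustrative token bucket: refills at `rate` tokens/second up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start with a full bucket
        self.clock = clock             # injectable so the sketch can be tested
        self.updated = clock()

    def allow(self, cost=1.0):
        """Return True and consume tokens if the request fits, else False."""
        now = self.clock()
        elapsed = now - self.updated
        self.updated = now
        # Refill for the time that has passed, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

A server using this approach would typically answer with 429 whenever `allow()` returns `False`.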

The choice of rate-limiting algorithm depends on the specific needs of the application. Factors to consider include the desired level of accuracy, the performance impact, and the complexity of implementation.
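
The accuracy versus memory trade-off is easiest to see in the sliding window log, which might be sketched as follows. The names `SlidingWindowLog`, `limit`, `window`, and `clock` are hypothetical, and the sketch tracks a single client in a single process.

```python
import time
from collections import deque


class SlidingWindowLog:
    """Illustrative limiter: at most `limit` requests in any `window` seconds."""

    def __init__(self, limit, window, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock
        self.log = deque()  # timestamps of accepted requests, oldest first

    def allow(self):
        now = self.clock()
        # Drop timestamps that have aged out of the window.
        while self.log and now - self.log[0] >= self.window:
            self.log.popleft()
        if len(self.log) < self.limit:
            self.log.append(now)
            return True
        return False
```

The log holds up to `limit` timestamps per client, which is the memory cost paid for avoiding the boundary bursts of a fixed window.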

Common Causes of the 429 Error

Understanding the root causes of a 429 error is the first step in resolving it. The error can stem from various sources, both on the client and server side.

- Exceeding API Rate Limits: Many APIs impose rate limits to prevent abuse and ensure fair usage. If your application exceeds these limits, you will receive a 429 error.

- Excessive Web Scraping: Web scraping involves automatically extracting data from websites. If a scraper sends too many requests in a short period, the website may block it with a 429 error.

- Faulty Scripts or Applications: Bugs in your code can sometimes cause applications to send an excessive number of requests to a server.

- User Behavior: In some cases, normal user behavior can trigger a 429 error, especially if the user is interacting with a resource-intensive application or website.

- Shared IP Address: If multiple users share the same IP address (e.g., behind a NAT gateway), their combined requests can exceed the rate limit for that IP address.

- DDoS Attack Mitigation: Servers under attack might aggressively rate limit requests to protect themselves, leading to 429 errors for legitimate users.

Identifying the specific cause of the 429 error is crucial for implementing the appropriate solution. Monitoring your application’s request patterns and API usage can help pinpoint the source of the problem.

How to Resolve the 429 Status Code: Strategies for Developers and Users

Resolving a 429 error requires a different approach depending on whether you are a developer or an end-user. Here are some strategies for both:

For Developers:

- Implement Exponential Backoff: This technique involves gradually increasing the delay between retries. For example, if the first retry is after 1 second, the second retry could be after 2 seconds, the third after 4 seconds, and so on. Adding a random offset (jitter) to each delay keeps many clients from retrying at the same instant. Together these help avoid overwhelming the server.

- Respect the Retry-After Header: The server often includes a ‘Retry-After’ header in the 429 response. Always respect this header and wait the specified amount of time before making another request.

- Optimize API Usage: Review your application’s API usage and identify areas where you can reduce the number of requests. Consider caching data, batching requests, or using more efficient API endpoints.

- Implement Queuing: Use a queue to buffer requests and send them at a controlled rate. This can help smooth out spikes in traffic and prevent exceeding rate limits.

- Monitor API Usage: Track your application’s API usage and set up alerts to notify you when you are approaching rate limits. This allows you to proactively address potential issues before they cause problems.

- Use API Keys Effectively: Ensure that your application is using API keys correctly and that each key is associated with a specific user or application. This allows the server to accurately track and manage rate limits.

- Implement Client-Side Rate Limiting: Consider implementing rate limiting on the client side to prevent your application from sending too many requests in the first place.
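
The first two strategies in this list can be combined in one retry loop. The sketch below is a hedged illustration: `call_with_backoff` and its parameters are hypothetical, and `send` stands in for whatever function performs the request and returns a `(status_code, headers)` pair.

```python
import random
import time


def call_with_backoff(send, max_attempts=5, base_delay=1.0, max_delay=60.0,
                      sleep=time.sleep):
    """Call send() until it returns a non-429 status or attempts run out.

    `send` is an assumed callable returning (status_code, headers_dict).
    Returns the final status code.
    """
    for attempt in range(max_attempts):
        status, headers = send()
        if status != 429:
            return status
        if attempt == max_attempts - 1:
            break  # no point sleeping after the last attempt
        retry_after = headers.get("Retry-After", "").strip()
        if retry_after.isdigit():
            # The server told us how long to wait; honor it.
            # (This sketch ignores the HTTP-date form of the header.)
            delay = float(retry_after)
        else:
            # Exponential backoff with full jitter: 1s, 2s, 4s, ... capped.
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            delay = random.uniform(0, ceiling)
        sleep(delay)
    return 429
```

Passing `sleep` as a parameter is a design choice that keeps the loop testable without real delays.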

For End-Users:

- Wait and Retry: The simplest solution is often to simply wait a few minutes and try again. The server may have temporarily rate-limited your requests.

- Clear Browser Cache and Cookies: If the site tracks your requests through a session cookie, or stale cached data keeps triggering reloads, clearing your browser’s cache and cookies may resolve the issue. It will not lift a limit tied to your IP address or account.

- Try a Different Browser or Device: In some cases, the problem may be specific to your browser or device. Trying a different browser or device can help determine if this is the case.

- Contact Support: If you continue to experience the 429 error, contact the website’s support team for assistance. They may be able to provide more information about the rate limits and how to avoid them.

- Check Your Internet Connection: On a flaky or unreliable connection, pages and apps may automatically retry failed requests, and those retries count toward the limit. Ensure that your internet connection is stable.

- Disable Browser Extensions: Some browser extensions can interfere with website functionality and cause excessive requests. Try disabling your browser extensions to see if this resolves the issue.

Real-World Examples of 429 Status Code in Action

The 429 status code is commonly used by various online services and APIs to protect their infrastructure. Here are a few real-world examples:

- Twitter API: The Twitter (now X) API uses rate limiting to prevent abuse and ensure fair access to its data. Developers who exceed the rate limits will receive a 429 error.

- GitHub API: The GitHub API also employs rate limiting to protect its servers. Different API endpoints have different rate limits, and developers need to be aware of these limits to avoid rate-limit errors, which GitHub documents as either 403 or 429 responses.

- E-commerce Platforms: E-commerce platforms often use rate limiting to protect against bots that attempt to scrape product data or place fraudulent orders.

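- Cloud Storage Services: Cloud storage services like AWS S3 and Google Cloud Storage throttle clients to prevent them from overwhelming the storage infrastructure. Google Cloud Storage responds with 429, while S3 signals throttling with a 503 Slow Down response instead.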

- Social Media Platforms: Social media platforms like Facebook and Instagram use rate limiting to protect against spam and abuse.

These examples illustrate the importance of understanding and respecting rate limits when interacting with online services and APIs. Ignoring these limits can lead to 429 errors and a degraded user experience.
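
Many services also report the remaining quota in response headers, so a client can pause before it ever sees a 429. The sketch below assumes GitHub-style header names (`x-ratelimit-remaining` and `x-ratelimit-reset`, the latter in UTC epoch seconds); other services use different names, and the helper `seconds_until_reset` is hypothetical.

```python
import time


def seconds_until_reset(headers, now=None):
    """Return how long to pause based on GitHub-style rate-limit headers.

    Returns 0.0 when requests remain or when the headers are missing.
    """
    # Header names are case-insensitive, so normalize before lookup.
    lowered = {key.lower(): value for key, value in headers.items()}
    try:
        remaining = int(lowered["x-ratelimit-remaining"])
        reset_at = int(lowered["x-ratelimit-reset"])
    except (KeyError, ValueError):
        return 0.0  # no usable information; do not block
    if remaining > 0:
        return 0.0
    if now is None:
        now = time.time()
    return max(0.0, float(reset_at - now))
```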

Advanced Techniques for Handling Rate Limiting in Complex Systems

In complex systems, handling rate limiting effectively requires more sophisticated techniques. Here are some advanced strategies:

- Distributed Rate Limiting: In a distributed system, rate limiting needs to be coordinated across multiple servers. This can be achieved using a centralized rate-limiting service or a distributed consensus algorithm.

- Adaptive Rate Limiting: Adaptive rate limiting involves dynamically adjusting rate limits based on real-time traffic patterns and server load. This can help optimize resource utilization and prevent overload.

- Priority-Based Rate Limiting: Priority-based rate limiting allows you to prioritize certain types of requests over others. For example, you might prioritize requests from paying customers over requests from free users.

- Token Bucket with Burst Capacity: This technique allows users to exceed the rate limit for a short period of time, up to a certain burst capacity. This can be useful for handling occasional spikes in traffic.

- Client-Side Throttling: Implementing throttling on the client-side can help prevent your application from overwhelming the server. This involves delaying or dropping requests based on the current rate limit.

- Circuit Breaker Pattern: The circuit breaker pattern can be used to automatically disable API calls when the rate limit is consistently exceeded. This can help prevent cascading failures and improve system resilience.

These advanced techniques can help you handle rate limiting more effectively in complex systems, ensuring that your application remains responsive and reliable even under heavy load.
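
As one illustration, the circuit breaker pattern applied to 429 responses might look like the sketch below. The class `CircuitBreaker` and its `threshold`, `cooldown`, and `clock` parameters are assumptions for this example, not a specific library's API.

```python
import time


class CircuitBreaker:
    """Illustrative breaker: stop calling after repeated 429s, retry after a cooldown."""

    def __init__(self, threshold=3, cooldown=30.0, clock=time.monotonic):
        self.threshold = threshold  # consecutive 429s before opening
        self.cooldown = cooldown    # seconds to stay open
        self.clock = clock
        self.failures = 0
        self.opened_at = None       # None means the circuit is closed

    def allow_request(self):
        if self.opened_at is None:
            return True
        # After the cooldown, let a trial request through (half-open state).
        return self.clock() - self.opened_at >= self.cooldown

    def record(self, status):
        """Update breaker state from the status code of a completed request."""
        if status == 429:
            self.failures += 1
            if self.failures >= self.threshold:
                self.opened_at = self.clock()  # open, or re-open after a failed trial
        else:
            self.failures = 0
            self.opened_at = None
```

Callers check `allow_request()` before each API call and pass the resulting status code to `record()`; while the circuit is open, they can fail fast or serve cached data instead of adding to the load.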

The Future of Rate Limiting: Trends and Innovations

Rate limiting is an evolving field, with new trends and innovations emerging all the time. Here are some key areas to watch:

- AI-Powered Rate Limiting: Machine learning algorithms can be used to dynamically adjust rate limits based on real-time traffic patterns and user behavior. This can help optimize resource utilization and prevent abuse more effectively.

- Blockchain-Based Rate Limiting: Blockchain technology can be used to create a decentralized rate-limiting system that is resistant to censorship and manipulation.

- Standardized Rate-Limiting Protocols: Standardized ways of communicating rate limits would make it easier for developers to integrate with different APIs and services. An IETF draft proposing common RateLimit response header fields is one step in this direction.

- Edge Computing for Rate Limiting: Moving rate-limiting logic to the edge of the network can reduce latency and improve performance.

- Integration with Identity Management Systems: Integrating rate limiting with identity management systems can provide more granular control over access to resources.

As the web continues to evolve, rate limiting will become an increasingly important tool for protecting online services and APIs. Staying up-to-date with the latest trends and innovations in this field is crucial for building secure and reliable applications.

Understanding Rate Limits Protects Website Stability

The 429 status code is more than just an error message; it’s a vital mechanism for maintaining the stability and security of web services. By understanding its causes and implementing appropriate solutions, developers can ensure a smooth user experience, while end-users can learn how to avoid triggering these limits. As rate-limiting techniques continue to evolve, staying informed about best practices and emerging trends is essential for navigating the complexities of modern web architecture. Share your experiences with the 429 status code in the comments below, and let’s continue to learn and improve together.